Politics & Society

Should we work with or turn off AI?

The law is always behind technology but given the sweeping changes heralded by new technologies, legislators need to get in front of the issue

Published 17 February 2020

Whether our online job applications are successful, whether our insurance policies are approved, even whether we pay a higher or lower price for products, is often determined by the ‘data detritus’ we leave behind.

We are tracked by cookies that follow our interests and behaviours, and this use of our data trails is only becoming more insidious as bigger data sets are manipulated by increasingly sophisticated algorithms, resulting in what business academic Professor Shoshana Zuboff has called “surveillance capitalism”.

In addition to concerns about civil liberties and human rights being undermined, democracy itself is being challenged by processes that tailor propaganda to individuals and aren’t subject to scrutiny.

The world as we know it is being changed by invisible computer programs, and the law is just not keeping up.

The “robodebt” scandal over the Australian government’s automated calculation of welfare debts is a high-profile example of what is going wrong – thousands of people were harassed and stressed by debt notices for money they didn’t owe.

People were offered little recourse or appeal, and the onus was on those receiving the letters to prove that there was no debt, often dating back years, rather than on the government to prove the debt was legitimate.

In November last year, the government halted the program – later conceding it was unlawful. It has since become evident that the government had been advised earlier that the program was illegal.

The way that ‘robodebt’ collection has been conducted raises urgent questions about how the executive branch and Australian law are responding to the new capabilities of technology.

But don’t we already have laws that can simply be applied to new technology? After all, a crime is a crime whether it is committed by paper or computer, isn’t it?

Well, yes and no.

Certainly, there is a lot of law that already applies to consumers and citizens. Human rights, consumer rights, corporate rights (even arguably board responsibilities), tort and contract law, just to name a few, all protect us in certain ways.

But the technological advances we are living through present special challenges.

For example, given the length of the terms and conditions on each of the online contracts people have to consent to when they sign up for different apps, it is questionable whether the term ‘consent’ can even have any meaning in a digital age where people never take the time to read the contracts in the first place.

People, of course, can take and are taking legal action to protect their rights, but this is a slow process using old law designed for another age.

International ride-share giant Uber is facing a class action from Australian taxi drivers, while another class action has been launched against the government over the robodebt scandal.

But when litigation is being brought, the onus is on the consumer or citizen to prove that there is a problem. This leaves an enormous gap for corporations and governments to act with potential impunity, or at least a lag between their poor behaviour and consumer rights being restored.

At a conference held by the Consumer Policy Research Centre in November last year, Australian Human Rights Commissioner Edward Santow referred to this gap as ‘in due course’ (ie in due course it was fixed).

But as Santow also pointed out, in the time period of those three little words, a lot can go wrong.

For example, in the case of robodebt, some of the most vulnerable people in Australia were unfairly harassed for debt they didn’t owe.

The stress and harm caused by these automated decisions, without accountability and transparency, is impossible to accurately calculate.

New regulations to respond to the use of technology are coming out regularly in Australia. The most notable is the new Consumer Data Right legislation, passed by the Australian government on 1 August 2019.

But these new and piecemeal legislative responses aren’t enough.

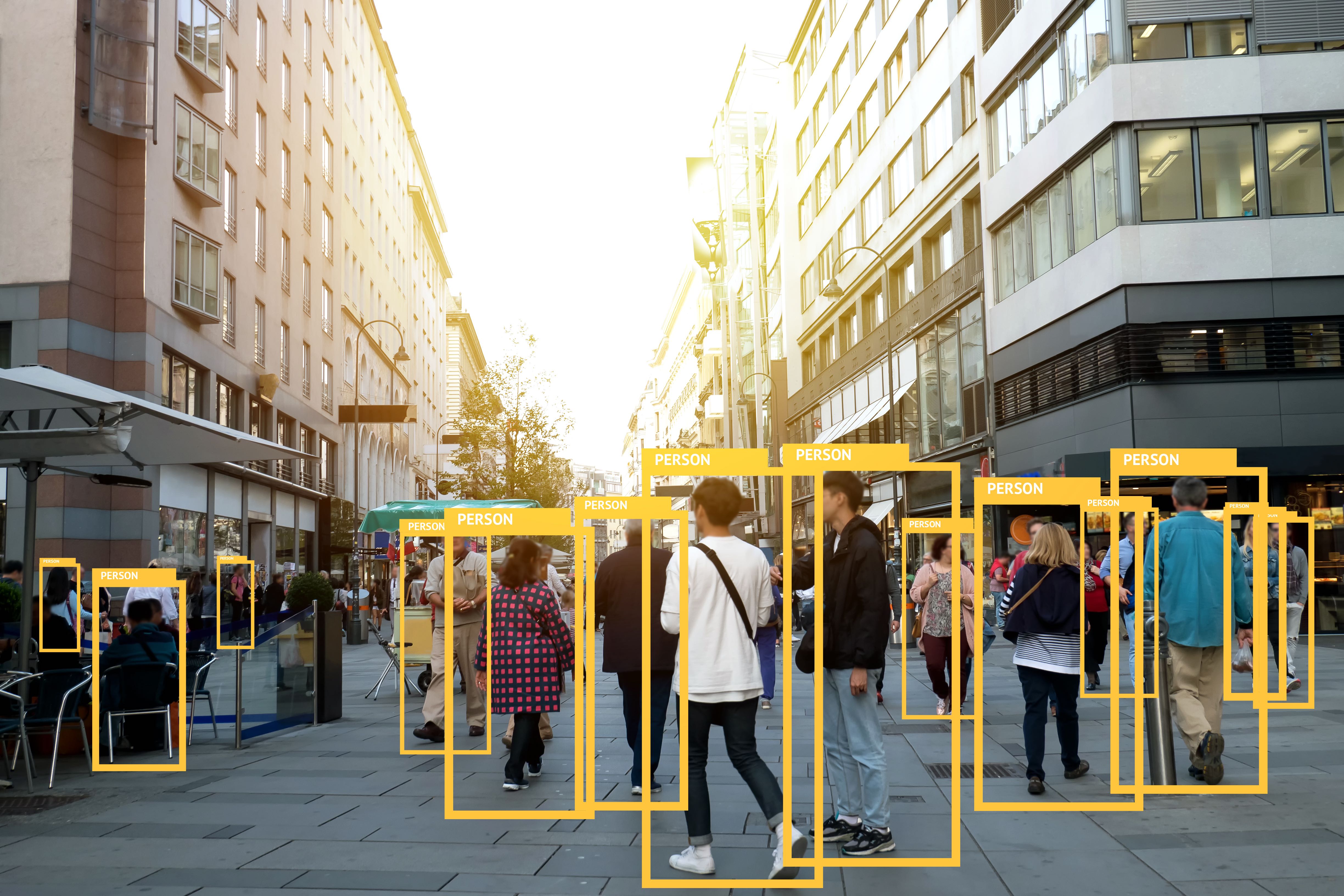

Some of this legislation is in response to an increase in the number of cases of automated decisions, lacking transparency or oversight, that are made about our everyday lives.

But some is based on the government’s desire to profit from the growing capability to collect data, which can be seen in the increasing use of biometric data (mostly facial recognition), from councils in Perth to trials in public schools in Victoria.

The law is always behind the technology – if law were to change in response to every technology, it is argued, it couldn’t be relied upon.

But the sweeping nature of the new technologies we are dealing with means legislators need to get more in front of the issue, rather than confront it piecemeal, or with a lag that allows the damage discussed above, or even worse.

Issues of cybersecurity, democracy, rights and consumer protection all go hand in hand.

When these issues are legislated on individually, often without the necessary technical wherewithal to fully understand or appreciate the implications and amid heavy lobbying by technology companies, the risk is that outcomes are skewed against citizens and consumers.

One response has been a focus on better embedding ethics into machine learning.

There are numerous movements in ethics – FAT (Fairness, Accountability and Transparency) and Ethics by Design – and many corporations and even universities are embedding staff to represent an ethical point of view in the design of technology.

Hundreds of papers are circulating on the internet, from both government and industry, outlining ethics strategies, often without implementation mechanisms or clarification of terms.

But a focus on ethics won’t be enough.

The recent Royal Commission into misconduct in the finance sector, for example, highlights the shortcomings of relying on ethics to protect consumer rights.

We don’t yet have model laws, but there are many working to build them.

These laws need to place the onus squarely back on the companies building the technology to comply with long-standing law and principles, and be able to regularly provide audits to show that this is the case.

When cars were first introduced onto roads, there was no term for jaywalking.

This was a term later introduced by the car industry to place the responsibility back on pedestrians when they were hit by cars. However, over time, we developed laws that protected pedestrians, and road rules now make clear that car drivers are responsible.

At the moment, by focusing on the individual responsibilities of the consumer or citizen, technology companies are effectively accusing technology users of jaywalking.

Eventually, this can be expected to shift and place greater responsibility on the technology companies to ensure that their tools don’t remove human autonomy, undermine democracies and fundamentally ignore basic human rights and legal obligations.

US politicians such as Bernie Sanders and Elizabeth Warren are calling for antitrust rules to break up the big tech companies.

In Australia, the Australian Competition and Consumer Commission is suing Google over allegations it misled consumers on the use of their personal location data.

Consumers are being exploited, and the awareness of citizens and consumers is changing. Now is the time for their rights to be protected, for systems to be built with existing law in mind, and for democratic values to be upheld.

Banner: Getty Images