Sciences & Technology

Banning kids from social media? There’s a better way

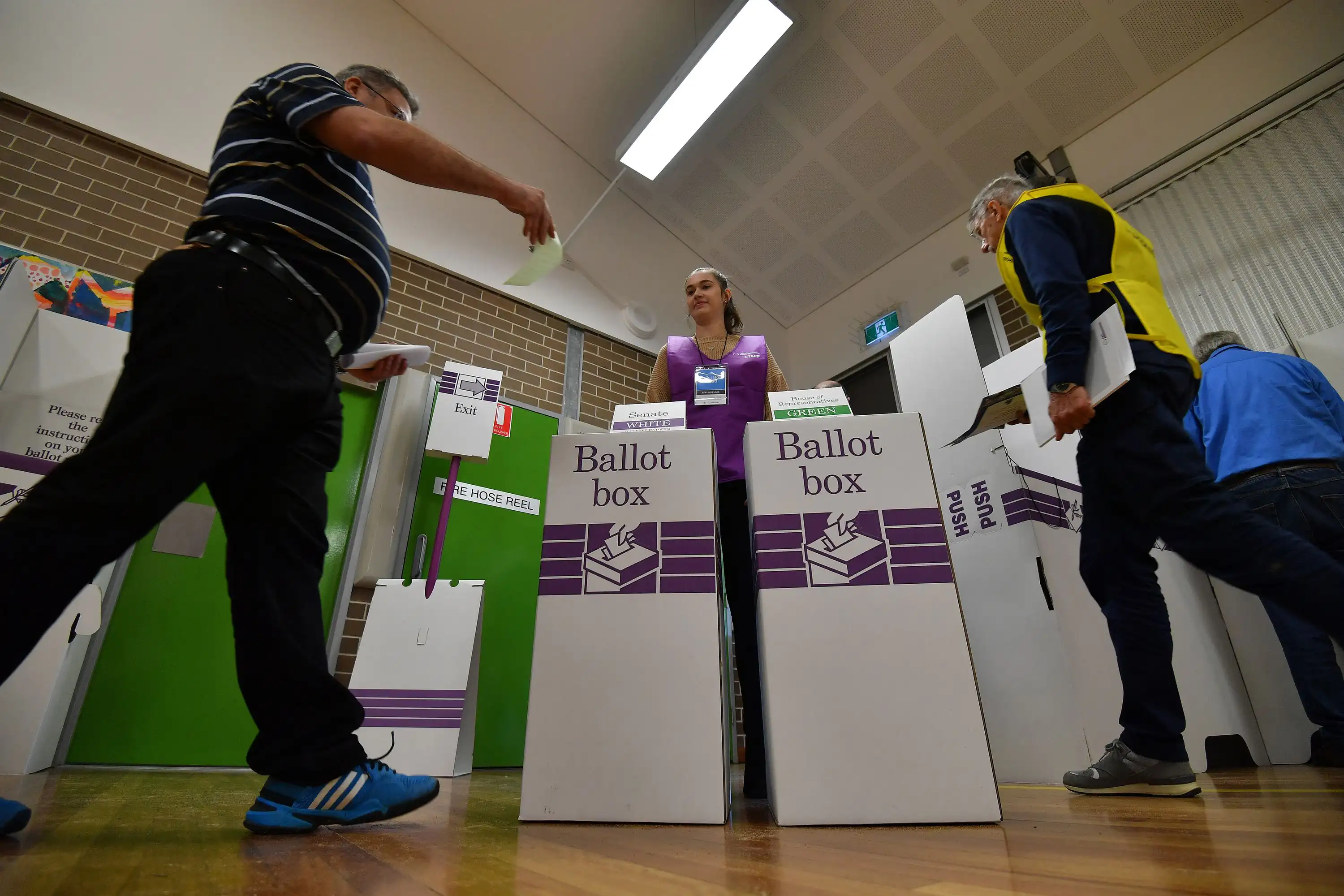

AI algorithms have completely altered the way we consume information. So what does this mean for our decision-making come election time?

Published 29 October 2024

There’s been no shortage of talk during this US election campaign about the role social media is playing in influencing how Americans vote.

From an AI-conjured image of Taylor Swift supposedly backing Trump, to an army of bots spreading propaganda on X, we’ve seen that the ability of algorithms to distribute misinformation in harmful ways is very real.

But misinformation may not be the real problem with these algorithms. It could be that we have little to no control over our feeds, and the psychological toll this takes on our ability to make rational decisions.

Social media wasn’t always like this.

When I first started using social media, it was in the early days of Facebook.

I remember FarmVille and the massive photo albums that would appear after a night out with friends. I’m even old enough to remember the tail end of MySpace and the AOL chatrooms.

My sister was once on an MTV dating show called Meet or Delete, where she was given access to 10 potential suitors’ hard drives.

She could peruse them as she saw fit, and she would decide from there if she’d date them or not. The show didn’t last very long, but I still find the concept funny.

The internet was a different place back then. It was an extension of your physical social network.

What you saw when you scrolled through Facebook was a chronological listing of what your friends or favourite celebrities had posted.

There was no magic other than horrible graphic design as we all taught ourselves basic HTML.

I don’t mean to induce nostalgia for a bygone era but rather to remind you of what social media used to be.

Before our cousins found themselves hurled into a right- or left-wing vortex of lies and misinformation. Before Facebook became one of the main reasons that family dinners are now so excruciating.

In 2018, Facebook introduced a sorting algorithm. So rather than just seeing a chronological listing, you saw what a computer in Menlo Park, California – home to the corporate headquarters of Facebook (now Meta) – thought might be most relevant to you.

For a while, a lot of us manually turned that feature off, preferring the old-fashioned way.

But eventually that became too difficult, so we put our trust in the algorithm. Twitter, Instagram and YouTube followed suit in the years after.

We are now living through the consequences.

Misinformation is nothing new, especially in the exceptionally flashy politics of the United States.

The advent of every new communication technology has seen the rise of some social disruption.

The radio brought Father Charles Coughlin, a religious and political broadcaster who reached millions when he spread his pro-fascist views on air.

It also brought about the American wing of the Nazi party, which famously rallied in 1939 in Madison Square Garden.

TV showed us images of the Vietnam War and stoked the protests of the late 1960s. Social media brought us the modern far right.

I would argue that the misinformation and fake news aided by AI today is also nothing new. It is a cousin of everything that we’ve seen before. It is a human problem that’s been attached to a technology buzzword.

What’s new and has me more worried is that the algorithms behind the new generations of Facebook, Twitter and all the rest are sending us down a different path to the social media I grew up with.

If we think of AI systems as computers that have some capacity to make decisions, then we’re now separated from making our own decisions about the information we consume.

This means that our experience with AI is fundamentally alienating – something we’re yet to grapple with as a society.

We’re used to having technology as an extension of how we interact with the world, but AI marks the first time a piece of technology can, in some ways, act on its own in the world (or at least that’s how it feels to many people).

At the highest level, we need to reckon with the question of what AI systems are.

Can they be held legally responsible for what they create and recommend? Who owns the copyright for the material they produce?

Given that AI learns right from wrong in the same way that humans do, by seeing and collecting data from the world around us, can we say that these systems are really making decisions on their own, or are they an extension (however abstracted) of us?

The alienation that we experience when we’re divorced from decisions about what we see is powerful and often causes a fear reaction.

It’s the unmooring that millions of people across the United States and the world are feeling – particularly palpable during a divisive and polarising election campaign – driven in part by the wedge that has emerged between them and the information they consume.

Maybe it’s the psychological hit we take from this loss of control and the effect this has on our capacity for rational decision-making that’s the real problem, even more so than the misinformation itself.

Trust is important – it underpins the legitimacy of our democracies. The danger of AI is that we risk weakening our trust across society. If we aren’t careful in how we think about and manage AI, we risk weakening our democracies even further.

Cory Alpert served for three years in the Biden-Harris administration and was previously a senior staffer on Pete Buttigieg’s historic 2020 Presidential campaign. He was the Senior Advisor to the President of the US Conference of Mayors and helped build the humanitarian assistance technology to respond to major disasters across the US and Ukraine.

He is now completing a PhD at the University of Melbourne studying the impact of AI on democracy.

Cory was part of a debate on the US election on 15 October 2024. This was the third event in the In Pursuit of Knowledge series, which brings together University of Melbourne experts to examine and illuminate the critical issues of our time.