Sciences & Technology

Quantum leap in computer simulation

Quantum computing could streamline financial calculations, optimise the workings of complex systems like logistical networks and enhance artificial intelligence. So how hard can it be?

Published 3 October 2018

So, you want to build and program a quantum computer. Why not?

Quantum computers could solve some large mathematical problems that would take classical computers longer than the age of the universe.

Their ability to quickly process certain complex problems could potentially yield new insights into molecular chemistry and biology, opening the way for new drugs and clever materials.

They could streamline financial calculations, optimise the workings of complex systems like logistical networks and enhance artificial intelligence. So how hard can it be?

Very.

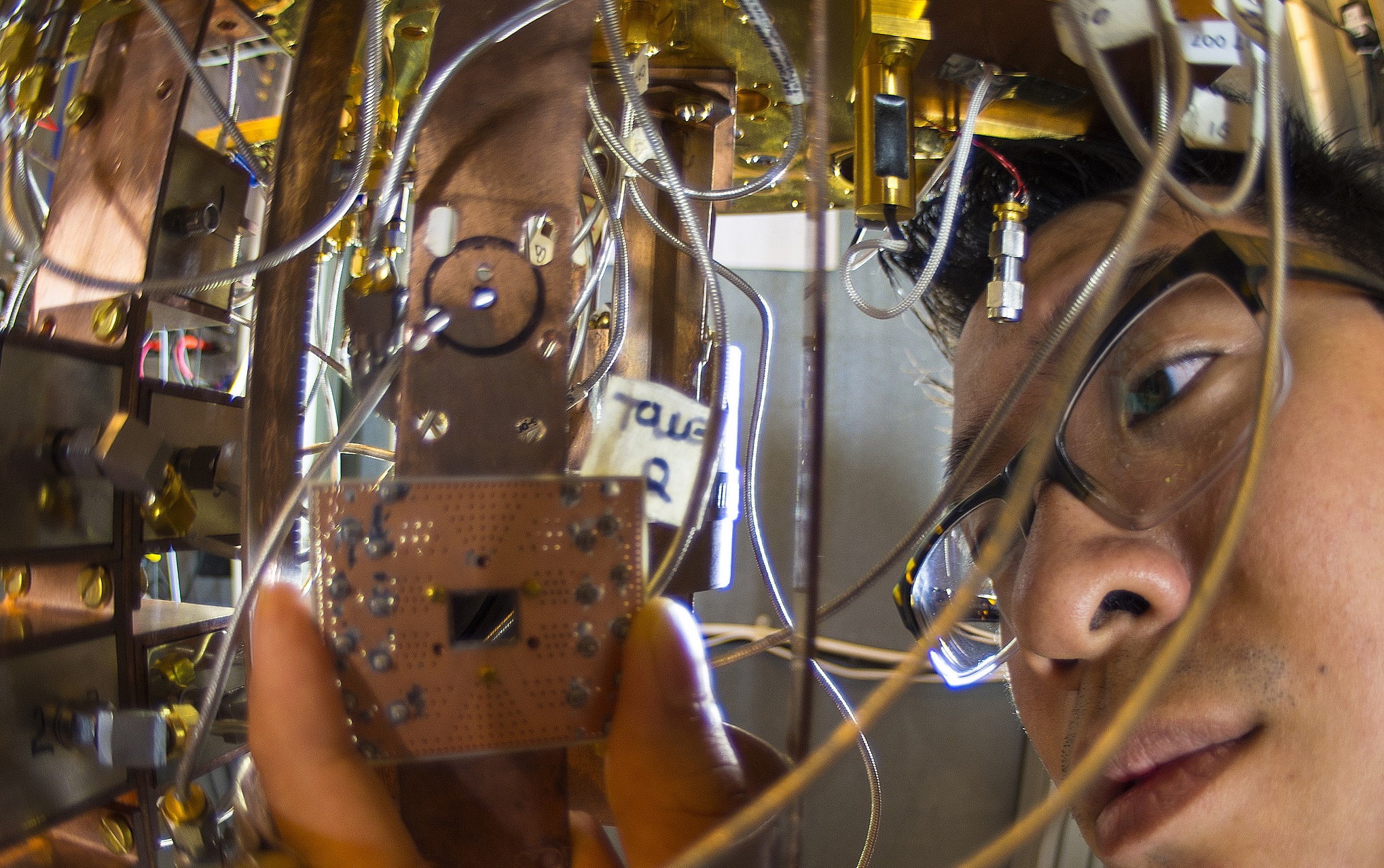

First up, you need to be working with quantum elements, such as atomic or subatomic particles (like electrons and photons), or microscopic circuits so small that they behave quantum mechanically.

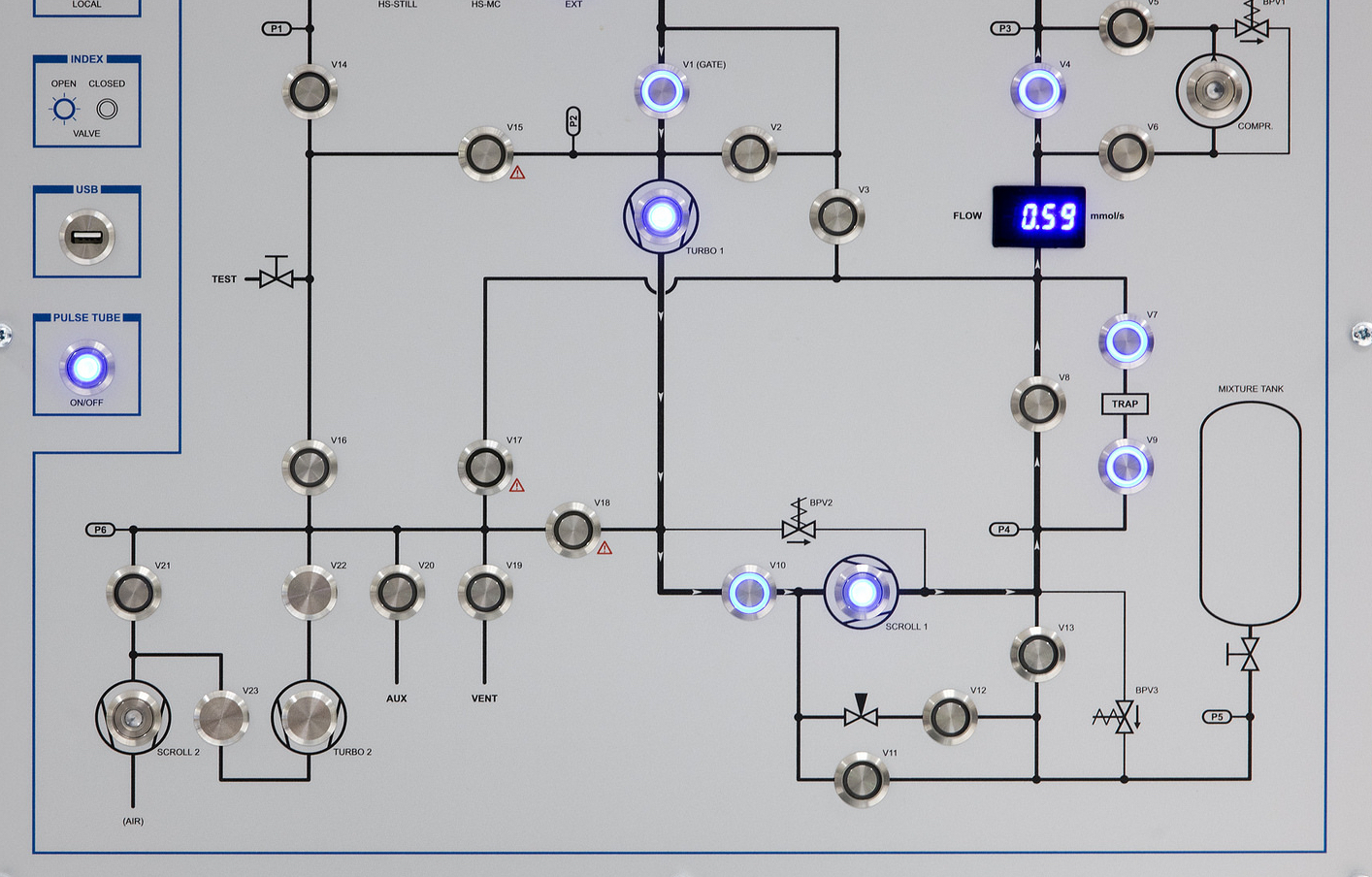

You’ll need to keep the system as free as possible of even the tiniest environmental disturbance. That means you’ll likely need a vacuum, or a fridge powerful enough to keep the system colder than outer space, close to absolute zero at almost 273 degrees Celsius below zero.

You’ll also need to cope with the fact that the quantum states linking the elements collapse within the tiniest fraction of a second.

Finally, you will need a special form of error correction because quantum states are so fragile that quantum computers are prone to constant errors. And these errors are difficult to check because as soon as you measure a quantum state it will collapse.

Any usefully scaled quantum computer, then, won’t fit in a laptop any time soon. It will be a large machine accessed remotely.

“It is a very complex, interdisciplinary challenge requiring physics and maths, but also materials science, engineering and computer science,” says Professor Lloyd Hollenberg, the Thomas Baker Chair at the University of Melbourne and a leading researcher on quantum science and technology.

Google, IBM, Intel and Microsoft are just some of the biggest names now trying to develop practical quantum computers.

Australian companies are involved too: Silicon Quantum Computing is developing a quantum computer based on silicon, Q-CTRL builds tools to measure and analyse system performance and optimise controls, and QxBranch builds applications focused on forecasting and optimisation.

So far there are only small working prototypes that can’t yet do anything practical that a classical supercomputer can’t, but the pace of development is accelerating.

So how does a quantum computer work differently from a classical computer?

Dr Charles Hill, a University of Melbourne research fellow in the Centre for Quantum Computation and Communication Technology, likens the difference to the way each machine would go about finding a needle in a haystack.

While a classical computer algorithm would crunch through every straw of hay as fast as it could, a quantum computer algorithm takes advantage of the weird physics of quantum objects to zero in on the most probable solution and then progressively uncover the actual needle.

“The effect is to make the needle you are looking for progressively much bigger than the haystack itself until all that is left is the needle,” says Dr Hill.
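Dr Hill’s haystack picture closely matches the way Grover’s search algorithm is usually explained, although the article does not name a specific algorithm. The sketch below is a minimal classical simulation, assuming a textbook version of Grover’s algorithm on 10 qubits (1,024 “straws”) with a single marked item; the function name `grover_search` and the needle position are hypothetical, chosen only for illustration. Each round flips the sign of the needle’s amplitude and then reflects every amplitude about the average, which steadily grows the needle’s share of the probability.

```python
import math


def grover_search(num_qubits: int, marked: int) -> list[float]:
    """Simulate a textbook Grover search and return the probability of
    measuring each basis state (each "straw in the haystack")."""
    n_states = 2 ** num_qubits
    # Equal superposition: every straw starts out equally likely.
    amps = [1 / math.sqrt(n_states)] * n_states
    # About (pi/4) * sqrt(N) rounds maximise the chance of finding the needle.
    rounds = math.floor(math.pi / 4 * math.sqrt(n_states))
    for _ in range(rounds):
        # Oracle step: flip the sign of the needle's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion step: reflect every amplitude about the average amplitude.
        mean = sum(amps) / n_states
        amps = [2 * mean - a for a in amps]
    # Amplitudes stay real here, so each probability is the amplitude squared.
    return [a * a for a in amps]


NEEDLE = 123  # hypothetical position of the needle among 1,024 straws
probs = grover_search(num_qubits=10, marked=NEEDLE)
print(f"P(needle) = {probs[NEEDLE]:.4f}")
print(f"P(any one other straw) = {probs[0]:.6f}")
```

With 1,024 straws the loop runs about 25 rounds, whereas a classical search would have to check hundreds of straws on average. Simulating this on an ordinary computer only works for small registers, because the list of amplitudes doubles with every added qubit.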

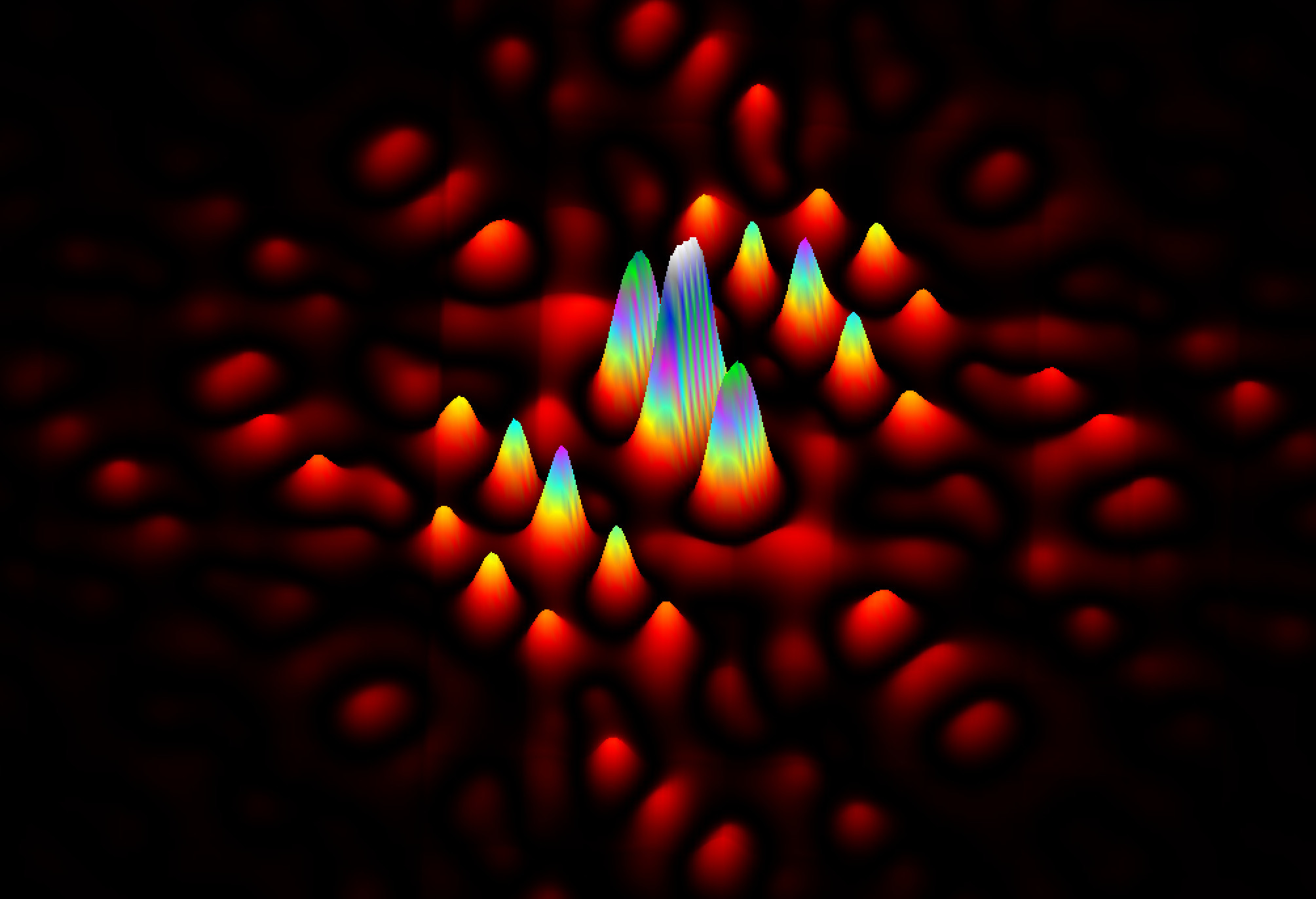

The weird physics comes from the sub-atomic quantum environment where the comforting certainties of the laws of classical physics begin to blur into probabilities. In this nano-scale world, objects like particles seem to exist in a sort of suspended state of unresolved probability.

Physicist Erwin Schrödinger tried to illustrate it with his thought experiment of a cat in a box that is neither dead nor alive until you open the box and take a look.

What is even weirder is that particles can inexplicably become correlated with each other’s state in a phenomenon called “entanglement”, which Albert Einstein himself called “spooky action at a distance”.

The good news is that it’s OK to be unsettled by this spooky stuff and still understand quantum computing and use the technology. Danish physicist and Nobel Laureate Niels Bohr once famously said “anyone who is not shocked by quantum theory has not understood it.”

Classical computers consist of microscopic transistors, or switches, that can be either off or on, creating the simple binary mechanism by which information is encoded so that a computer can process data.

These transistors can be made into “bits” of information by having on or off represented as two numbers, 1 or 0.

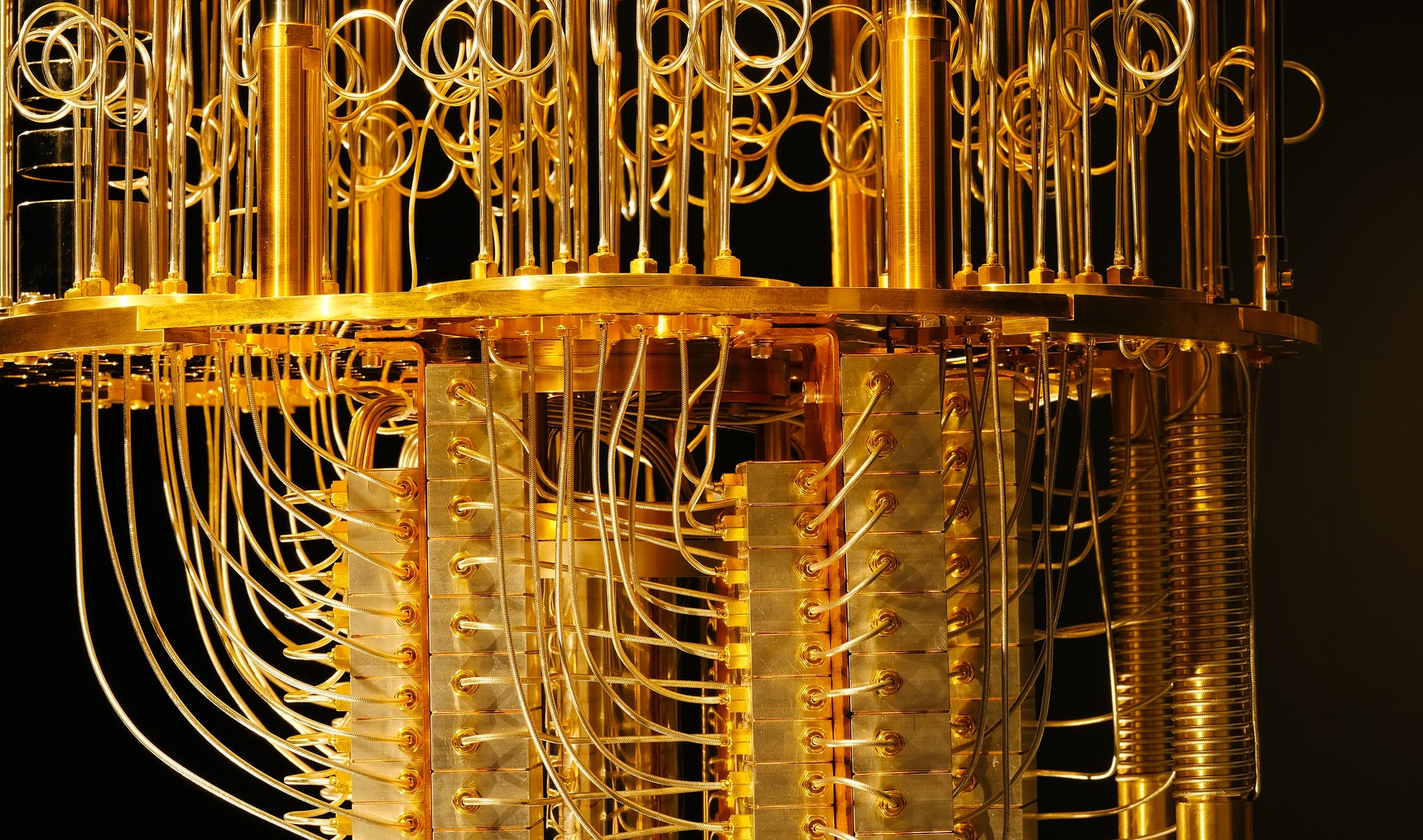

A quantum computer uses a quantum version of bits, “qubits”, in which the transistor-equivalents are quantum mechanical elements, like atoms, electrons, particles of light, or even quantum superconducting circuits that conduct electricity with no resistance. Like a transistor, these objects can also be in either of two states, labelled as 1 or 0.

But the big advantage of quantum bits is that because of the weird physics, quantum objects can also be coerced into a kind of non-committal existence, in which their actual state is unresolved and is instead a matter of probability of measuring one state or the other.

This means that while two classical “bits” can be in only one of four combinations, two quantum bits can, in a sense, be potentially all four combinations at once.

This is “superposition” and is partly what makes quantum computers potentially so powerfully efficient at some tasks.
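One way to make the “four combinations at once” idea concrete is to describe two qubits as a list of four amplitudes, one for each classical pattern 00, 01, 10 and 11, where the squared magnitude of each amplitude is the probability of measuring that pattern. The sketch below is a minimal pure-Python illustration, assuming the standard Hadamard gate as the way to put each qubit into superposition; the helper `apply_single_qubit_gate` is hypothetical, written only for this example.

```python
import math

H = 1 / math.sqrt(2)
HADAMARD = [[H, H], [H, -H]]  # standard Hadamard gate as a 2x2 matrix


def apply_single_qubit_gate(state, gate, target):
    """Apply a 2x2 gate to qubit `target` (qubit 0 = rightmost bit of the index)."""
    new_state = [0j] * len(state)
    for index, amp in enumerate(state):
        in_bit = (index >> target) & 1
        for out_bit in (0, 1):
            # Same bit pattern as `index`, but with the target qubit set to out_bit.
            out_index = (index & ~(1 << target)) | (out_bit << target)
            new_state[out_index] += gate[out_bit][in_bit] * amp
    return new_state


# Two classical bits hold exactly one of 00, 01, 10, 11. Two qubits start in |00>...
state = [1 + 0j, 0j, 0j, 0j]
# ...and a Hadamard on each qubit spreads the amplitude over all four patterns.
for qubit in (0, 1):
    state = apply_single_qubit_gate(state, HADAMARD, qubit)

for index, amp in enumerate(state):
    print(f"{index:02b}: amplitude {amp.real:+.3f}, probability {abs(amp) ** 2:.3f}")
```

Measuring this register still returns just one of the four patterns, each with probability 0.25; any speed-up comes from algorithms that make the amplitudes interfere before the measurement is taken.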

The real computational efficiency is most likely powered by “entanglement” in which the probable state of one quantum object, once it is finally measured, correlates with the state of other quantum objects it is entangled with.
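A minimal sketch of entanglement, assuming the textbook Bell state (|00> + |11>)/sqrt(2), prepared from |00> with a Hadamard gate on one qubit followed by a CNOT gate. The function names `bell_state` and `measure` are hypothetical, and measurements are simulated by sampling from the squared amplitudes. Each qubit on its own reads 0 or 1 at random, yet the two results always agree.

```python
import math
import random


def bell_state():
    """Prepare (|00> + |11>)/sqrt(2) from |00>: Hadamard on qubit 0, then CNOT.
    Amplitudes are returned in the order 00, 01, 10, 11 (labels are q1 q0)."""
    h = 1 / math.sqrt(2)
    a00, a01, a10, a11 = 1 + 0j, 0j, 0j, 0j  # start in |00>
    # Hadamard on qubit 0 mixes the pairs that differ only in q0.
    a00, a01 = h * (a00 + a01), h * (a00 - a01)
    a10, a11 = h * (a10 + a11), h * (a10 - a11)
    # CNOT (control q0, target q1) flips q1 whenever q0 is 1: swap 01 and 11.
    a01, a11 = a11, a01
    return [a00, a01, a10, a11]


def measure(state, rng):
    """Sample one joint outcome with probabilities |amplitude|**2."""
    probs = [abs(a) ** 2 for a in state]
    index = rng.choices(range(len(state)), weights=probs)[0]
    return index & 1, (index >> 1) & 1  # (q0, q1)


rng = random.Random(2018)  # fixed seed so the run is repeatable
state = bell_state()
shots = [measure(state, rng) for _ in range(1000)]
agree = sum(q0 == q1 for q0, q1 in shots)
ones = sum(q0 for q0, _ in shots)
print(f"qubits agreed in {agree} of 1000 shots")
print(f"qubit 0 alone read 1 in {ones} of 1000 shots")
```

Perfect agreement in a single measurement basis could also be mimicked by a shared classical coin; the correlations that no classical model can reproduce appear when the qubits are measured in different bases, which this sketch does not attempt.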

Ultimately, we don’t know why the quantum world is dominated by superposition and entanglement, but scientists are able to write computer algorithms that harness these properties.

“Through superposition and entanglement the numbers encoded on the states of qubits effectively come alive in the sense they ‘know’ about each other.

“These deep quantum mechanical connections throughout the information set can be manipulated by the user to process the data according to the quantum algorithm being run,” says Professor Hollenberg.

“Quantum algorithms basically implement quantum interactions among the qubits to magnify certain data probabilities amongst the cacophony of possibilities until it zeros in on your answer,” he says.

For example, a classical computer would take more than the entire history of the universe to decode some of the encryption we are now using to guard our online financial transactions. But a large enough quantum computer could choreograph the probabilities within quantum states to break the code, theoretically, within days or weeks.
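Widely used public-key schemes such as RSA rely on the fact that factoring a very large number is impractically slow on classical computers. The article does not name the method, but the best-known quantum attack is Shor’s algorithm, in which the quantum computer’s job is to find the period r of a^x mod N, after which ordinary arithmetic turns r into factors. The sketch below is a toy illustration on tiny numbers, assuming the textbook reduction; the function names are hypothetical. It finds the period by brute force, which is exactly the step that becomes hopeless classically for numbers hundreds of digits long and that a quantum computer would speed up.

```python
from math import gcd
from typing import Optional


def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).
    Brute force here; this is the step Shor's algorithm hands to the quantum computer."""
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_with_period(a: int, n: int) -> Optional[tuple[int, int]]:
    """Classical part of Shor's algorithm (assumes 1 < a < n): turn the period
    of a**x mod n into factors. Returns None when this a fails; try another."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared  # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: no useful square root
    x = pow(a, r // 2, n)
    if x == n - 1:
        return None  # a**(r/2) == -1 mod n gives only trivial factors
    p = gcd(x - 1, n)
    return p, n // p


print(find_period(7, 15), factor_with_period(7, 15))
print(find_period(2, 21), factor_with_period(2, 21))
```

For N = 15 and a = 7 the period is 4, so x = 7^2 mod 15 = 4, and gcd(4 - 1, 15) = 3 reveals the factors 3 and 5.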

Professor Hollenberg says a full-scale quantum computer big enough to beat encryption would need to comprise millions of qubits. To put that in perspective, the largest prototypes are now around 50 qubits.

“But that prototypes, such as the IBM-Q machine, exist at all is remarkable, and the ability to remotely access and program these systems provides a valuable experience in the reality of quantum computing,” says Professor Hollenberg.

A practical quantum computer needs so many qubits because computer engineers need to build in redundant qubits to check for errors.

“Error correction in quantum computing is a major challenge because you can’t look directly at a qubit to see if something is going wrong – when you do the quantum state collapses.

“You have to use other essentially disposable qubits to which the information about the qubit data is transferred that we can then measure and so infer the veracity of the quantum information in question,” says Professor Hollenberg.
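The idea of learning about errors without looking at the data itself can be illustrated with the three-qubit bit-flip code, a standard textbook example rather than the scheme used in the Hill and Hollenberg architecture. In the sketch below, a logical qubit alpha|0> + beta|1> is spread across three physical qubits, and two parity checks (do qubits 0 and 1 agree? do qubits 1 and 2 agree?) identify which single qubit flipped without revealing alpha or beta. In hardware those parities would be read out through extra ancilla qubits; here, as a simplifying assumption, they are computed directly from the simulated amplitudes. All function names are hypothetical, and the code only handles bit-flip errors, whereas practical schemes must also correct phase errors.

```python
import math

# Syndrome (Z0Z1, Z1Z2) -> which qubit to flip back (None = no error detected).
SYNDROME_TO_QUBIT = {(1, 1): None, (-1, 1): 0, (-1, -1): 1, (1, -1): 2}


def encode(alpha: complex, beta: complex) -> list:
    """Spread alpha|0> + beta|1> over three qubits as alpha|000> + beta|111>."""
    state = [0j] * 8
    state[0b000], state[0b111] = alpha, beta
    return state


def apply_x(state: list, qubit: int) -> list:
    """Bit-flip (Pauli-X) error on one qubit: swaps indices differing in that bit."""
    return [state[i ^ (1 << qubit)] for i in range(len(state))]


def parity(state: list, q1: int, q2: int) -> int:
    """Expectation of Z_q1 Z_q2: +1 if the two qubits agree, -1 if they differ.
    For the states this code expects it is exactly +1 or -1, so reading it out
    (via an ancilla qubit in hardware) does not disturb alpha or beta."""
    total = 0.0
    for index, amp in enumerate(state):
        same = ((index >> q1) & 1) == ((index >> q2) & 1)
        total += abs(amp) ** 2 * (1 if same else -1)
    return round(total)


def correct(state: list) -> list:
    """Read both parity checks and undo the single bit flip they point to."""
    syndrome = (parity(state, 0, 1), parity(state, 1, 2))
    qubit = SYNDROME_TO_QUBIT[syndrome]
    return state if qubit is None else apply_x(state, qubit)


logical = encode(math.sqrt(0.8), math.sqrt(0.2))  # example amplitudes
for flipped in range(3):
    repaired = correct(apply_x(logical, flipped))
    ok = all(abs(r - l) < 1e-12 for r, l in zip(repaired, logical))
    print(f"error on qubit {flipped}: recovered = {ok}")
```

Each parity check tells us where a flip happened but carries no information about alpha or beta, which is why the encoded quantum information survives being checked. The price is three physical qubits (plus ancillas) for every logical qubit, and realistic codes need many more.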

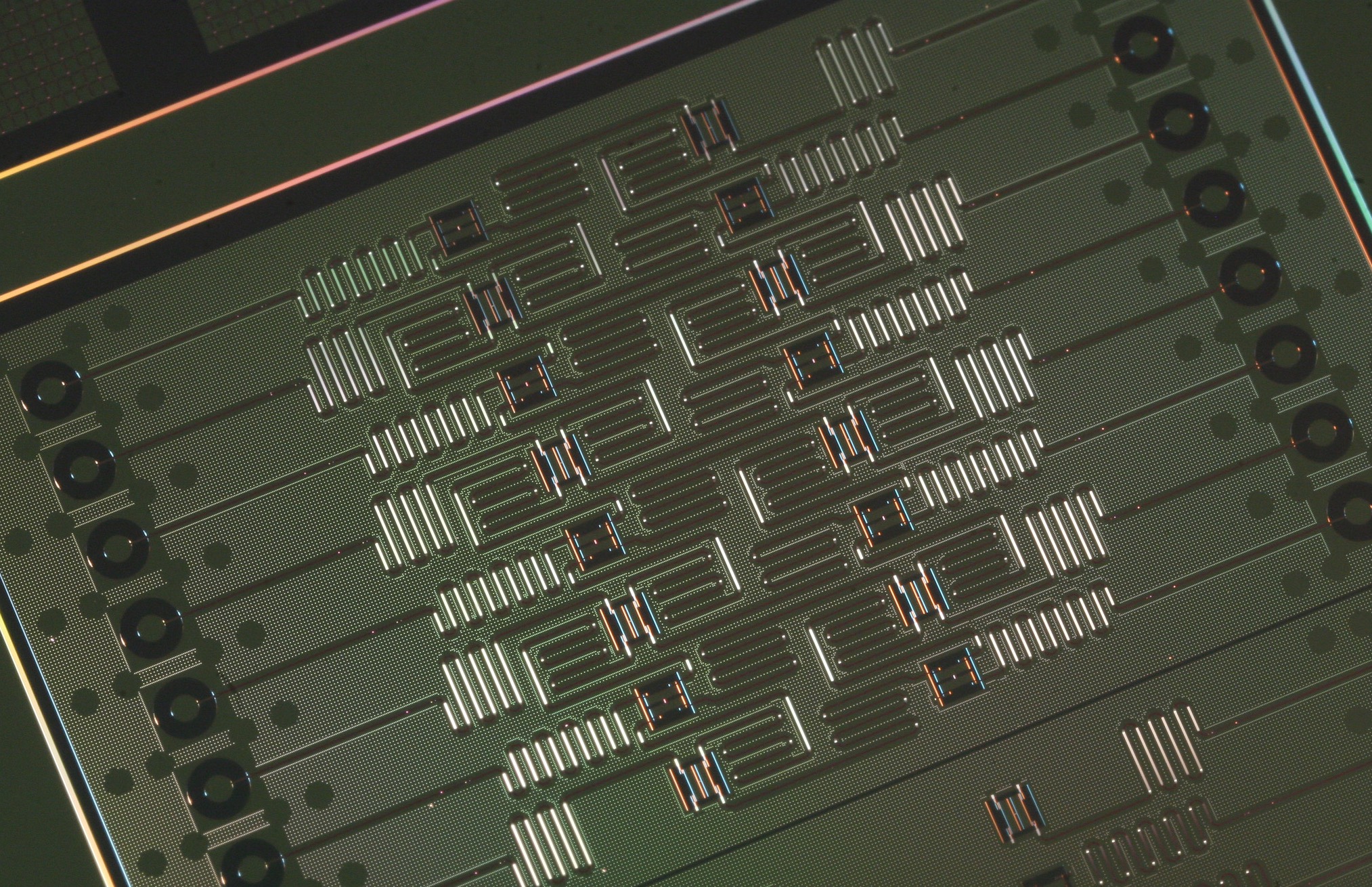

All this requires some pretty fancy nano-scale wiring and quantum computer design. One of the ongoing challenges in scaling up a quantum computer is staying on top of this error correction, using clever coding to minimise the number of qubits and clever engineering to make the most of the available space.

One of the few quantum computer architecture designs that addresses these problems was developed by Dr Hill and Professor Hollenberg, and is now at the heart of Australia’s effort to build silicon-based quantum computer hardware that is being led by Australian of the Year Professor Michelle Simmons at the University of New South Wales.

“Often designs for quantum computers only consider the tip of the iceberg – scaling up the number of qubits – but fail to consider how the machine will actually operate in order to successfully implement quantum error correction. Designing around the realities of quantum error correction has been a particular focus of our approach,” says Professor Hollenberg.

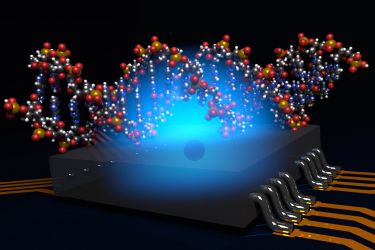

“One of the major advantages of this silicon-based approach is that the systems encode information on individual phosphorus atoms in the silicon crystal, which means the overall system can be quite small,” he says.

“But therein lies another challenge that we address in our designs – how to control atomic elements that are so close to each other.”

“Qubits based on single phosphorus atoms are of course incredibly small, but remarkably researchers in the group of Professor Sven Rogge at the University of New South Wales can actually image the quantum wave function using a scanning tunnelling microscope.”

Direct comparisons between the way these phosphorus-atom qubits work and the theory developed by Dr Muhammad Usman and others in Professor Hollenberg’s group show that these qubits are extremely well understood. This, says Professor Hollenberg, is a critical factor in creating the quantum computer design.

So how close are we to having a useful quantum computer?

Professor Hollenberg says the technology is moving very quickly. Within the next five to 10 years we are likely to have reliable quantum computers of between 100 and 1000 qubits, which may be large enough and precise enough to start solving some real problems.

As the hardware develops, the machines themselves may look very different to what we now envisage. But what we do know is that the area will be increasingly dominated by the urgent need to develop quantum computing software – the ability to program and map problems to a quantum setting.

“End-users who seek to capture quantum advantage and be part of the journey will need to start getting quantum-ready now.”

But he says a full-scale error corrected quantum computer in the millions of qubits is still a way off. And between now and then Professor Hollenberg believes it’s possible that further discoveries could yet shrink the timeframe, or even change the direction of quantum computing.

“In my experience, based on 20 years working in this area, quantum mechanics always surprises. I’m pretty sure something will happen over the next ten to 20 years that will change the story again.”

The road to full-scale quantum computing is still suitably quantum.

Find out about the University of Melbourne’s IBM Quantum Hub.

Banner image: Graham Carlow/IBM