Should school rankings matter?

In Victoria the VCE40+ figure is commonly used to rank schools – but is this a fair reflection of school quality?

Published 12 December 2017

It is December, which means Australian high school graduates are anxiously awaiting their final school scores. But their schools are just as anxious, because the scores will be used publicly to rank them, and the public and private school systems, against each other.

In a state like Victoria these rankings will be based on a number so broad it’s of little use on its own, or worse, based on meaningless and misleading data.

Moreover, they routinely disadvantage top performing government schools. And yet many parents use them to decide where to send their children to school.

So why are the rankings flawed, and can we do better?

In Victoria, most rankings use the percentage of raw study scores of 40 out of 50 and above achieved at a given school in the Victorian Certificate of Education assessment, the so-called VCE40+. But this number is meaningless for several reasons.

First, raw study scores are not meant to be compared across subjects; doing so is comparing apples and oranges because raw scores measure the achievement relative to peers in the same subject.

Second, the threshold of 40 is arbitrary. The parents of a struggling student might be interested in how well a school performs at a lower threshold. However, the parents of a student who wants a score high enough to get into a prestigious law course would find 40 too low.

Third, for an individual student, it is important to know what subjects a school is producing 40+ scores in, rather than the overall 40+ performance. Someone who is interested in arts but not sciences may not like a high-ATAR school that gets most of its high scores in the sciences and none at all in the arts.

The problem with using raw scores

Before raw VCE study scores can be compared across subjects to produce an ATAR, they need to be scaled. Raw scores measure achievement relative to peers in the same subject. Scaled scores are adjusted to account for differences in student cohorts taking each subject. Scaled scores produce a result as though all Year 12 students had taken the subject.

Scaled scores can be substantially different from raw scores. A raw study score of 40 in Food and Technology can, for example, become 35 after scaling. A raw score of 40 in Latin could translate into 53. This is why raw scores are scaled to produce an Australian Tertiary Admission Rank (ATAR) for each student, used by universities and other tertiary institutions for selection.

But VCE40+ rankings treat both scores as though they are the same.
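To make the distortion concrete, here is a minimal sketch in Python. It uses only the two scaling examples quoted above (a raw 40 becoming 35 in Food and Technology and 53 in Latin). The two schools, their student numbers and the function name `percent_at_or_above` are hypothetical, and real scaling is not a simple lookup.

```python
# Scaled values for a raw study score of 40, taken from the two examples in
# the text. Real scaling varies by subject, score and year; this lookup is
# only an illustration.
SCALED_FROM_RAW_40 = {"Food and Technology": 35, "Latin": 53}


def percent_at_or_above(scores, threshold=40):
    """Percentage of scores that are at or above the threshold."""
    if not scores:
        return 0.0
    return 100.0 * sum(score >= threshold for score in scores) / len(scores)


# Hypothetical schools: ten students each, every one with a raw score of 40.
schools = {
    "School A": ["Food and Technology"] * 10,
    "School B": ["Latin"] * 10,
}

raw_40_plus = {}
scaled_40_plus = {}
for name, subjects in schools.items():
    raw_scores = [40 for _ in subjects]
    scaled_scores = [SCALED_FROM_RAW_40[subject] for subject in subjects]
    raw_40_plus[name] = percent_at_or_above(raw_scores)
    scaled_40_plus[name] = percent_at_or_above(scaled_scores)

for name in schools:
    print(name, "raw 40+:", raw_40_plus[name], "scaled 40+:", scaled_40_plus[name])
```

On raw scores the two hypothetical schools are tied, but once the scores are scaled they sit at opposite ends of the table.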

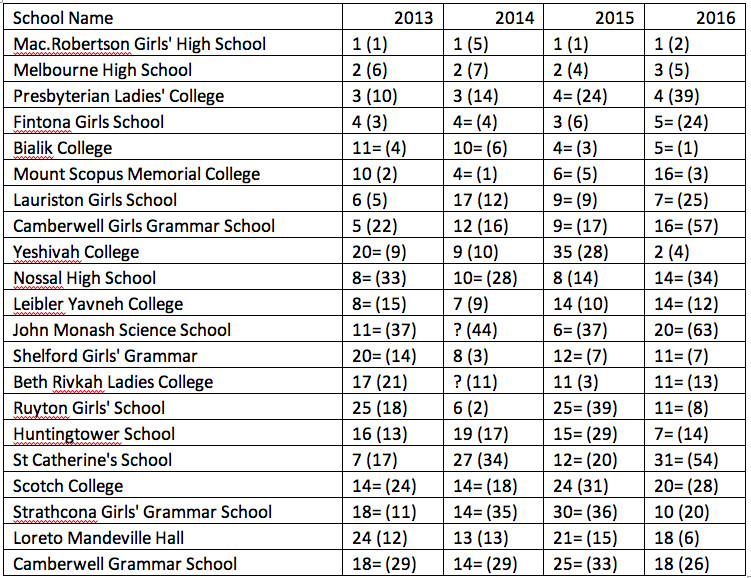

To be meaningful, school rankings must account for scaling. For example, a ranking could be based on the percentage of students with a high composite ATAR, such as an ATAR of 90 or above on the 0-99.95 scale, the figure most commonly reported by the media. This ranking differs dramatically from one based on VCE40+, as Table 1 shows.

You can see from this table that some schools, particularly those in the public sector, are consistently disadvantaged by VCE40+ rankings. This happens to schools where subjects that are scaled up are popular.

The importance of subjects

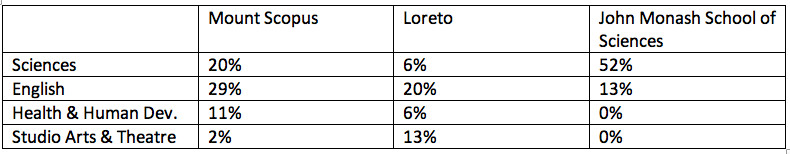

These bald rankings also obscure how these schools score in different subjects (see Table 2). John Monash Science School ranks much higher on ATAR90+ than on VCE40+ because it is strong in the sciences, and science scores are commonly scaled up.

But we can also see that each of these three schools has different strengths, and neither a VCE40+ nor an ATAR90+ ranking captures that.

Looking at subjects is important, but clearly, there will always be a demand for single-number comparisons. Yet, if VCE40+ is meaningless, why pick ATAR90+ instead?

Rankings based on ATAR99+ or ATAR80+ are just as valid and may be more relevant to some people. But, unfortunately for single-number rankings, whether we pick 80, 90 or 99 matters a great deal for how a school performs in a ranking. Let’s compare non-government Loreto and Camberwell Grammar, which have the same rank in ATAR90+ (see Table 3).

Camberwell Grammar has a much higher fraction of students with scores above 99, which would rank it among the top 5 to 7 schools in Victoria. Does that make Camberwell Grammar a better school than Loreto? Maybe not: Loreto has a noticeably lower fraction of students who score below 80, so which school ranks higher depends on the student’s needs.
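The same effect can be sketched in a few lines of Python. The ATAR lists below are invented for two hypothetical schools, School X and School Y, and are not the results of any real school. The function names are also illustrative.

```python
def percent_at_or_above(atars, threshold):
    """Percentage of students whose ATAR is at or above the threshold."""
    if not atars:
        return 0.0
    return 100.0 * sum(atar >= threshold for atar in atars) / len(atars)


def rank_schools(atars_by_school, threshold):
    """Schools sorted from highest to lowest share at the given threshold."""
    shares = {
        name: percent_at_or_above(atars, threshold)
        for name, atars in atars_by_school.items()
    }
    return sorted(shares.items(), key=lambda item: item[1], reverse=True)


# Hypothetical data: ten students per school.
atars_by_school = {
    "School X": [99.5, 99.2, 95, 91, 85, 78, 75, 70, 65, 60],
    "School Y": [98, 96, 93, 91, 88, 86, 84, 82, 81, 72],
}

for threshold in (80, 90, 99):
    print(f"ATAR{threshold}+", rank_schools(atars_by_school, threshold))
```

With these made-up numbers the two schools tie at ATAR90+, School X comes first at ATAR99+ and School Y comes first at ATAR80+, so the order depends entirely on the threshold chosen.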

What we need is better data to make rankings more meaningful.

In most other states, rankings are based on ATAR. In Queensland, one can easily find rankings based on one of three numbers: ATAR 67+, 79+ and 91+. But we can do much better than that.

We have the MySchool website, which reports NAPLAN scores in each learning domain for each school. A similar report on subject scores, for each subject at every school, would greatly enhance parents’ understanding of schools’ strengths. Unlike NAPLAN, which is a snapshot of student performance on a particular day, VCE scores track performance over a much longer time.

Even more useful may be a tool similar to DreamSchool Finder, produced by the Boston Globe using government data in the US state of Massachusetts. Parents can enter a profile of criteria that matter to them – resources, student diversity, college preparation, English, Maths, or graduation rates. The Finder will then produce a list of schools that best match this profile.

There is no reason why we can’t have such a tool here in Australia to provide a more accurate picture of how our schools are performing.

Note: all numbers used in this article are publicly available.

Banner image: iStock