Politics & Society

Holding a black mirror up to artificial intelligence

We are increasingly relying on artificial intelligence for important decisions, but we don’t know how those decisions are being made. We urgently need Explainable AI (XAI).

Published 23 August 2018

Imagine a person on trial for a murder they did not commit.

During sentencing, the judge invites an expert witness to advise on the defendant’s likelihood of re-offending. The expert claims to be 90 per cent certain the defendant will re-offend and is an ongoing threat to the community.

But the expert refuses to explain their reasoning due to intellectual property concerns. The judge accepts the expert’s argument and the defendant is jailed for life.

Case closed.

This scenario is not too far removed from what is already unfolding in US courtrooms. In 2013, Eric Loomis, of La Crosse, Wisconsin, was accused of driving a car that had been used in a shooting. Loomis pleaded guilty to evading an officer and no contest to operating a vehicle without the owner’s consent.

He was sentenced to six years in prison, a sentence influenced by his score on the COMPAS scale, the Correctional Offender Management Profiling for Alternative Sanctions.

COMPAS is a machine-learning algorithm that estimates the likelihood of someone committing a crime, based on information about their past conduct. COMPAS considered Loomis a risk - but we don’t know why.

The algorithms that generate COMPAS decisions are considered intellectual property (IP) and so are not disclosed in court. Defendants and their lawyers cannot determine precisely why a judgement is made, and so cannot argue its validity.

Several studies have raised concerns that COMPAS assessments correlate race with the risk of repeat criminal behaviour, indicating a higher likelihood of re-offending among African-American defendants.

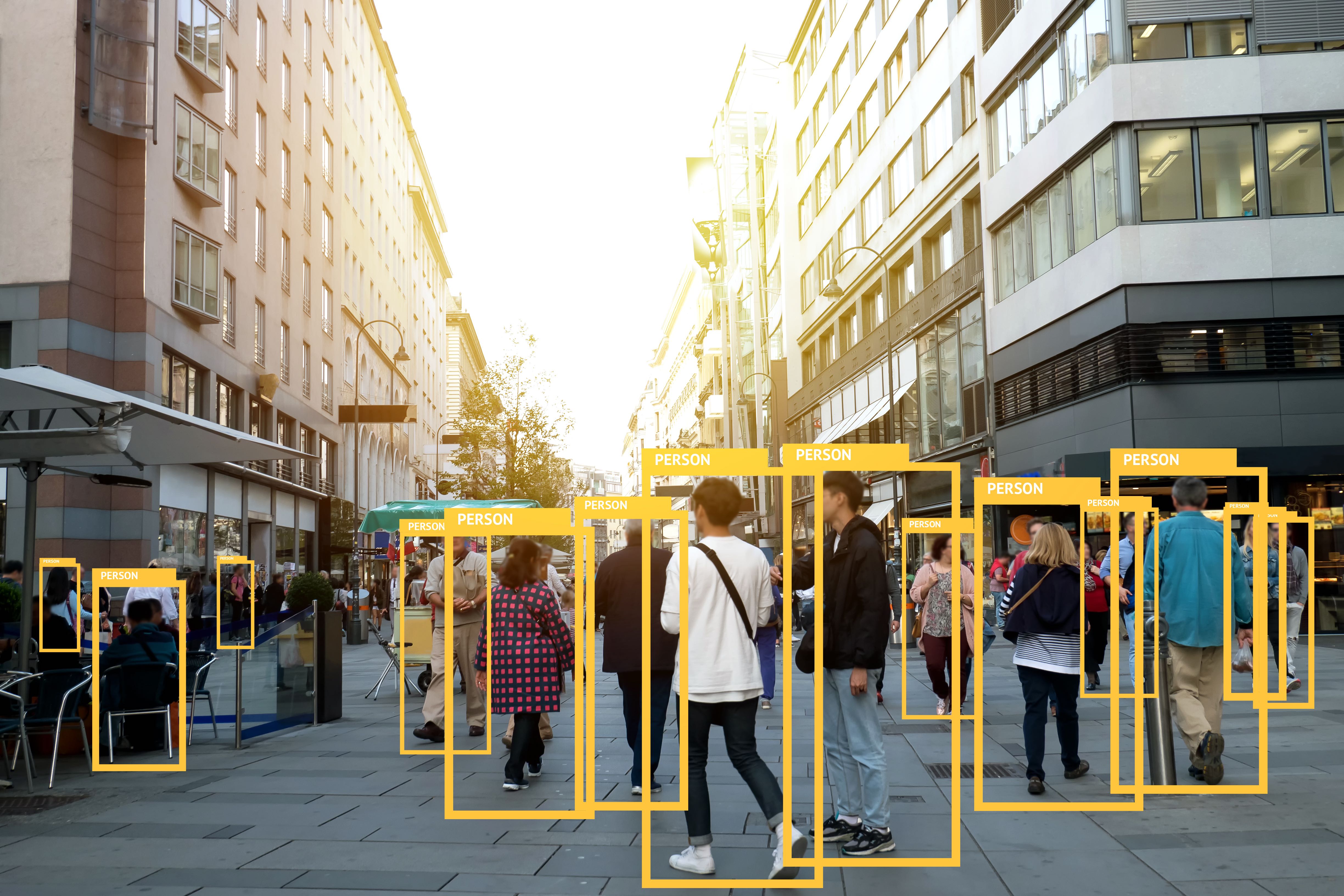

In Australia, New South Wales police use a similar system to proactively identify and target potential suspects before they commit a serious crime. In Pennsylvania in the US, another application aims to predict the likelihood of a child being neglected by its parents.

We’re now using Artificial Intelligence (AI) to predict people’s future behaviour in the real world.

A University of Melbourne research project, Biometric Mirror, illustrates how AI data could be captured and provided to third parties, like employers, government or social circles, irrespective of its flaws.

Biometric Mirror takes a photo of your face and holds up a foggy mirror to how society sees you. It rates a person’s psychometrics, such as perceived level of aggression, emotional stability and responsibility, and physical attributes, like age and attractiveness.

The mirror offers no explanation for these ratings – they are simply based on how others judged a public data set of photos and how closely your face resembles those photos.

And it highlights the potentially disastrous consequences of placing blind trust in AI.

As AI becomes increasingly ubiquitous, it becomes more urgent to understand why decisions are made, and whether they are correct, fair and transparent.

Explainable AI (XAI) aims to address why algorithms make the decisions they do, so the reasoning can be understood by humans. Society needs to trust the decisions and legitimacy of actions arising from these algorithms.

The EU’s recently announced General Data Protection Regulation appears to support this and has been interpreted by researchers as mandating a right to explanation for algorithmic decisions.

Explainable AI faces complex hurdles, including understandability, causality and creating human-centred XAI.

XAI is currently driven by experts wanting to understand their own systems – it’s not motivated by trust or ethical dilemmas.

In the 1980s and 1990s, most AI applications contained knowledge about how to perform a medical diagnosis from symptoms or advise on mortgage loan suitability, for example, and this knowledge was encoded in a format understandable to other experts.

The researcher could provide a reasonable prediction about the output and trace the reasoning - like a software engineer debugging a standard computer program.
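To give a feel for what "tracing the reasoning" meant in those earlier systems, here is a minimal sketch of a rule-based mortgage adviser. The rules, thresholds and field names (`income`, `repayment`, `defaults`) are hypothetical and chosen purely for illustration; they are not taken from any real system. What matters is that every decision comes with a readable record of which rule was checked and whether it fired.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Rule:
    name: str                               # human-readable label for the rule
    condition: Callable[[Dict], bool]       # returns True when the rule applies
    conclusion: str                         # decision made if the rule fires


# Hypothetical rules for illustration only.
RULES: List[Rule] = [
    Rule("income_too_low", lambda a: a["income"] < 3 * a["repayment"], "decline"),
    Rule("poor_credit_history", lambda a: a["defaults"] > 0, "decline"),
]


def assess(applicant: Dict) -> Tuple[str, List[Tuple[str, bool]]]:
    """Return a decision plus a trace of every rule that was checked."""
    trace: List[Tuple[str, bool]] = []
    for rule in RULES:
        fired = rule.condition(applicant)
        trace.append((rule.name, fired))
        if fired:
            # Stop at the first rule that fires; the trace explains why.
            return rule.conclusion, trace
    return "approve", trace


if __name__ == "__main__":
    decision, trace = assess({"income": 5000, "repayment": 2000, "defaults": 0})
    print(decision, trace)
```

An applicant who is declined can be told exactly which rule was responsible, something the deep neural networks described next cannot offer out of the box.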

However, during the past five to six years, deep neural networks have dominated AI. These networks are so complex that, given a specific input and output, even an AI expert could not explain why an output occurred. So AI practitioners are interested in explainability to help them understand their own systems.

Causation indicates an event was the result of another event. Correlation is a statistical measure that describes the size and direction of a relationship between two or more variables.

For example, smoking is correlated with, but does not cause, alcoholism.

In AI, correlations can help make forecasts about future purchasing behaviours and the likelihood of committing a crime, but they do not explain causes and their effects. So it’s hard for us to identify with the outcomes because our brains are hard-wired to understand cause and effect.
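The gap between correlation and causation can be shown with a small simulation. This is a sketch under stated assumptions, not a model of any real behaviour: a hidden factor drives two traits, `a` and `b`, which never influence each other. The variable names, the noise levels and the sample size are all invented for illustration.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def simulate(n=10_000, seed=0):
    """Return (observed, intervened) correlations between traits a and b."""
    rng = random.Random(seed)
    # Assumption: one hidden factor influences both traits.
    hidden = [rng.gauss(0, 1) for _ in range(n)]
    a = [h + rng.gauss(0, 1) for h in hidden]
    b = [h + rng.gauss(0, 1) for h in hidden]
    observed = pearson(a, b)

    # "Intervene" on a: set it independently of the hidden factor.
    # b is left untouched, because a never caused b in the first place.
    a_forced = [rng.gauss(0, 1) for _ in range(n)]
    intervened = pearson(a_forced, b)
    return observed, intervened


if __name__ == "__main__":
    observed, intervened = simulate()
    print(f"observed correlation:   {observed:.2f}")
    print(f"after forcing a:        {intervened:.2f}")
```

In the observed data the two traits move together, so either one is useful for predicting the other. Once `a` is set independently, the relationship disappears: changing `a` does nothing to `b`. A predictive model trained on the observed data would capture the first pattern and say nothing about the second.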

Most Explainable AI research looks at how to extract causes of decisions from specific models to provide insights for experts in artificial intelligence. This doesn’t help people understand why they are going to prison or why Biometric Mirror called them irresponsible or unattractive.

In a human exchange, a highly-skilled communicator will infer exactly the point of misunderstanding using the context of the conversation, the structure of the question, the mental model of the person and their level of expertise, and social conventions. They then come to a shared understanding of the reasons via a dialogue.

Most Explainable AI research neglects this. It leaves AI researchers to determine a ‘good’ explanation, but experts cannot judge what makes a good explanation for a non-expert.

Our research into Explainable AI takes a human-centred approach. This collaborative research involves computer science, cognitive science, social psychology and human-computer interaction, and treats Explainable AI as an interaction between person and machine.

Truly Explainable AI integrates technical and human challenges. We need a sophisticated understanding of what constitutes Explainable AI: one that is not limited to an articulation of the software processes, but that considers the people at the other end of these responses.

XAI is not a panacea for all ethical concerns regarding AI. But explanatory systems that we understand and interact with naturally are a minimum requirement to establish widespread confidence in these algorithmic decisions.

Then maybe jailers in the US will be able to provide answers to the likes of unfortunate Eric Loomis.

Banner: Getty Images