Business & Economics

Will a robot take your job?

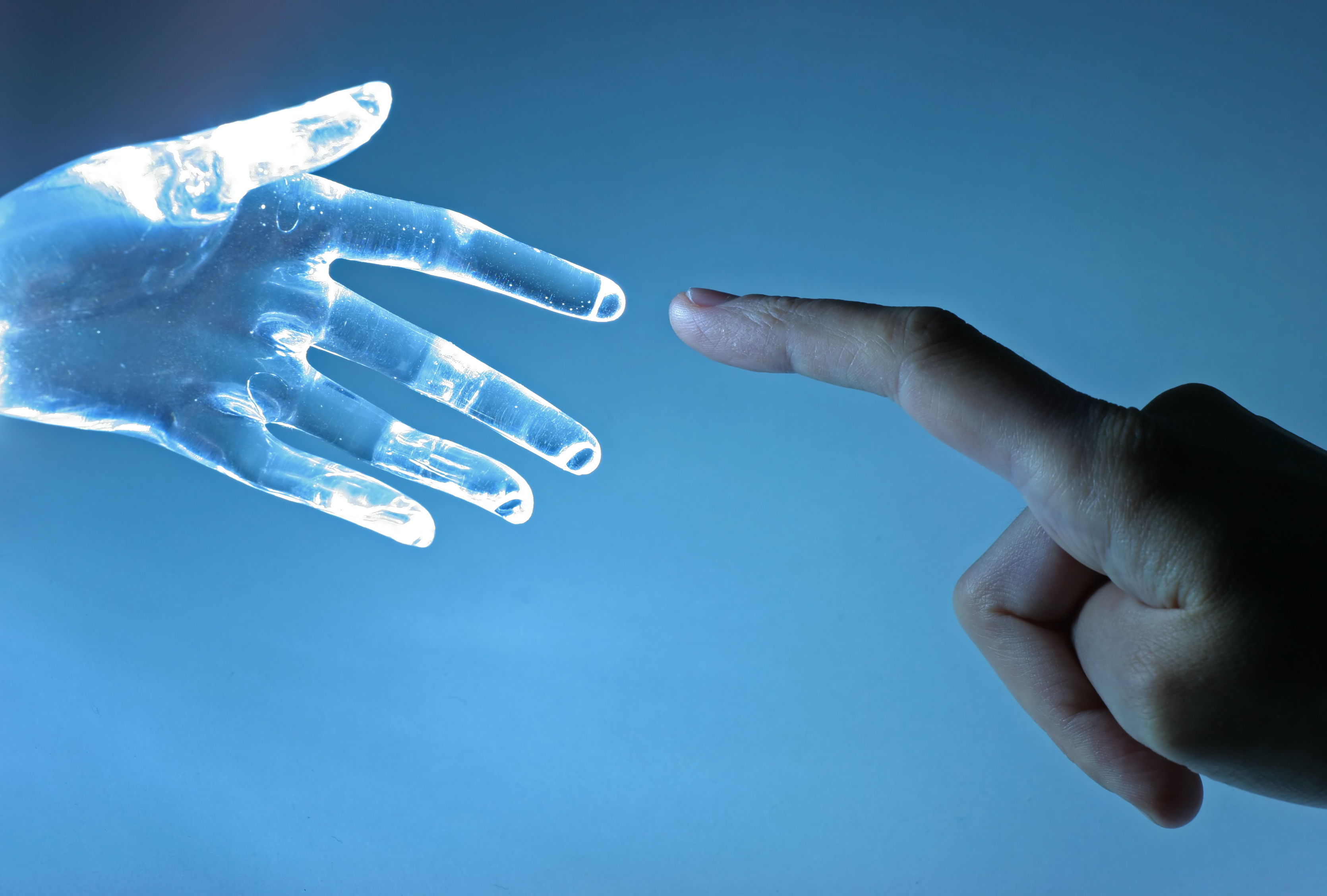

The rise of the machines has already evoked fears of robots taking over the world, but in reality the frontier of human-machine interaction is collaboration

Published 18 August 2017

As machines become more intelligent, our relationship with them is changing, prompting calls from experts like Elon Musk and Stephen Hawking for greater, proactive regulation of artificial intelligence.

In July 2017 there was a much-hyped story about Facebook abandoning an experiment after two artificially intelligent programs appeared to be chatting to each other in a strange, non-human language. The truth is a little less scary: the researchers realised they had made a programming error by not encoding a rule requiring the programs to speak in English, so the programs began chatting in a derived shorthand. Even so, the story instils a sense of fear about an automated future.

While ‘the rise of the machines’ seems like the stuff of science fiction, robots can already perform intelligent tasks such as tailoring their responses to individuals and organising themselves without direct human input.

“The challenges we’re now facing are about the interaction of humans and robots,” says Professor Chris Manzie, from the University of Melbourne.

Professor Manzie’s work at the Melbourne School of Engineering, with colleagues Associate Professor Denny Oetomo and Associate Professor Ying Tan, covers a range of projects where collaboration between humans and machines is central to meeting the challenges of the future.

Here are three examples of how they’re helping us to ‘make friends’ with robots.

Robotic arms that patients can move with the power of thought are under development.

Rather than a simple mechanical prosthetic arm, a neuroprosthetic arm picks up the neural signals from muscles or nerve endings just above where the original arm was severed; it can respond to signals that originated in the brain and have travelled along the neural pathway.

In the future, robotic prosthetics will be able to read more complex neural signals coming directly from the brain, but reading from the nerve bundles in the muscle is already quite robust. By the time it reaches the muscle, the message from the brain has already been filtered through our nervous system: one signal flexes a particular muscle, and another flexes a different muscle.

Patients who receive a robotic arm have to learn how to use it over time, just as an infant has to learn how to walk. But the learning isn’t a one-way street. The robotic arm is also responding and adapting to the person as he or she learns more about how to use the device, and a kind of learning circuit develops through this relationship.

“It’s an interface,” says Associate Professor Tan. “In the interaction it’s not just humans giving instructions to the robotics; the human is also adapting. It’s not just one-directional communication, but two.”

Rehab robots are also on their way.

Associate Professors Oetomo and Tan are developing robots that can help people recover movement in their upper limbs following events such as a stroke.

“In the case of rehabilitation, the robot is coaching the human to learn a task, such as reusing an arm,” says Associate Professor Oetomo.

Designed to act as assistants to clinical therapists, but ones that patients can access daily or as often as needed, the robots track each patient’s responses and progress, and partly assess, on an individual basis, what will make that person’s learning optimal.

“If you give too much assistance the patient won’t try hard enough, too little and they’ll be frustrated and won’t want to learn either,” Associate Professor Tan explains. “The right balance is important. It’s what we call optimal assistance: enough so we are motivated to continue but not so much we feel we don’t need to try.”

The robot must be able to offer just the right amount of help.

“In the end, the robot needs to be programmed in a way that takes into account that for people, there’s no learning without error,” says Associate Professor Oetomo.

Taking trial and error into consideration, and ensuring that challenges are optimally graded, the researchers have developed a way to program the robots so that they appear to understand us and respond to our individual needs.

This opens up a whole host of interactions we previously considered possible only in human-to-human relationships. Interactions that rely on the capacity to be responsive, and even empathic, could become a feature of human-machine experiences.

While neuroprosthetics and rehabilitation aids work on the one-to-one relationship between human and machine, Professor Manzie and the team are also working on what are known as swarm applications: human-machine collaborations with large groups of robots.

In a swarm, one person controls multiple robots with a single command, and the robots collaborate with each other to fulfil the tasks at hand.

“Think about exploring a collapsed building,” says Professor Manzie. “It’s too dangerous for people to enter, so we might want to send in a team of robots. It’s important the cognitive load on the human doesn’t increase with the number of autonomous agents, which would happen if he or she had to operate each robot separately.”

“There’s inherent intelligence with these robots that assists with taking over some of the required cognitive load in operating a large number of robotic devices such as a swarm,” explains Associate Professor Oetomo.

“For example, a heterogeneous combination of ground and flying vehicles may be sent in, each carrying its own suite of sensors. Each robot is capable of something unique, and they have to decide within the team what’s optimal: a flying robot might survey a big pile of rubble, so a heavier robot carrying equipment can scale it optimally, for instance.

“Instead of individual commands to each robot, you send in the team and they’ll coordinate it themselves.”

The robots collaborate with us, but also with each other.

The complexities of the human-machine interface are stretching the boundaries of engineering, and this is evident in the multidisciplinary focus of the work.

In neuroprosthetics, the team works closely with medical colleagues such as Professor Peter Choong, Head of the University of Melbourne’s Department of Surgery at St. Vincent’s Hospital, and Professor Mark Cook, Director of the Graeme Clark Institute. There are other important collaborations too, such as with lawyers and psychologists, to help deal with some of the trust-related questions raised by autonomous systems.

“We fully recognise that engineering is not going to solve these questions on its own; it’s going to have to be an interdisciplinary effort. It’s a very exciting time,” says Professor Manzie.

The patients the neuroprosthetics team works with are also very much part of the picture. One, who lost his arm, wants nothing more than to be able to raise a beer to his lips at the pub with his right hand as a mark of his recovery. It may sound straightforward, but it isn’t simple, because so many muscles are involved in the movement.

“Patients have a really important role, especially in helping us understand the real world challenges,” says Associate Professor Oetomo. “It’s the detail that keeps you up at night.”

Professor Manzie agrees: “It’s real world impact. It’s not just us engineers working in the lab.”

Banner image: iStock