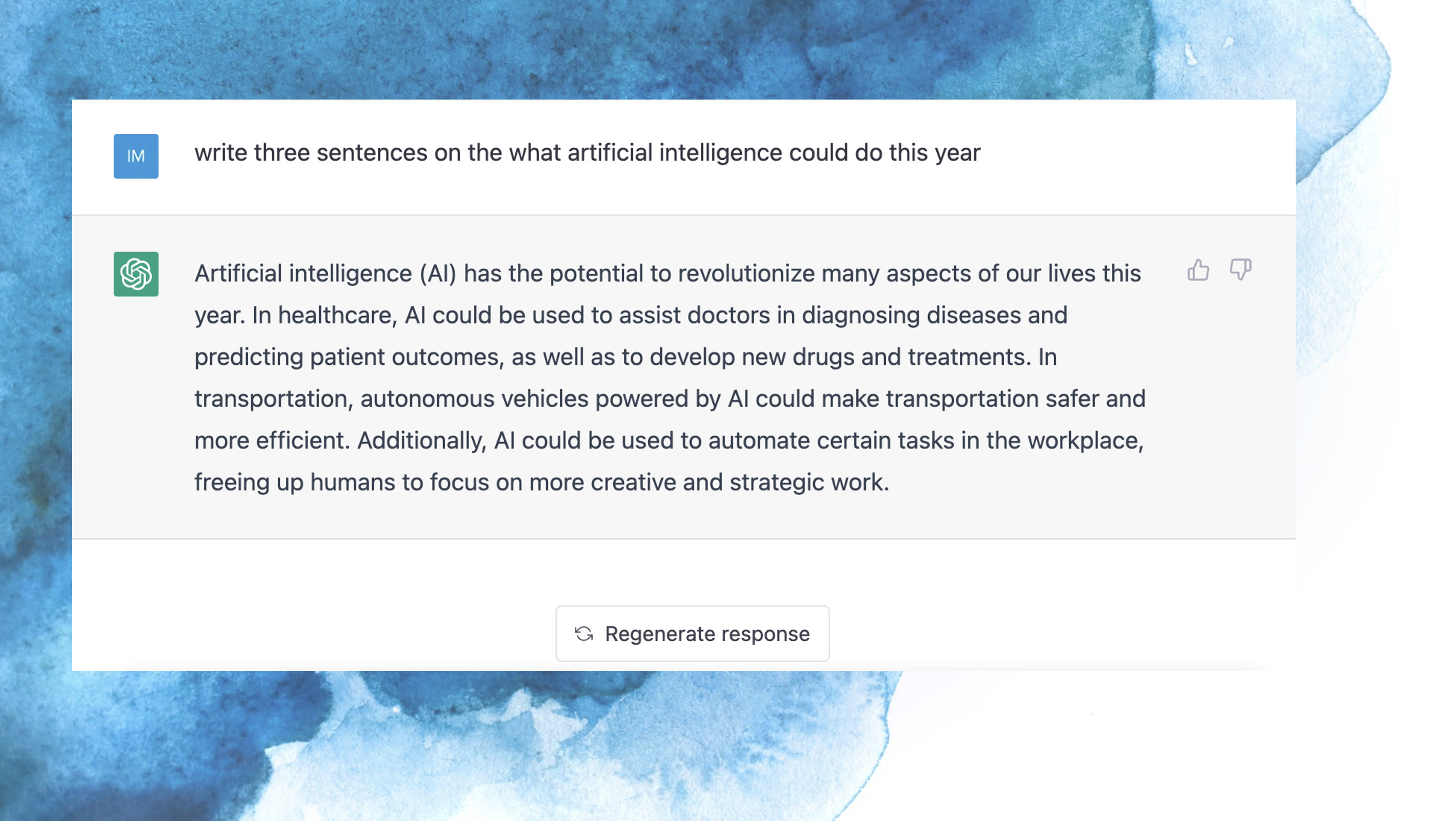

In 2022, artificial intelligence chatbots and image generators seemed to take over the internet, but what can we expect from AI in 2023?

Published 4 January 2023

A kind of artificial intelligence (AI) called generative AI has vividly captured public attention.

In 2022, many of us were first introduced to – and intrigued by – the capacities of image-generating AI like Midjourney, DeepAI and DALL-E 2 to produce sophisticated and strange pictures based on ‘interpretations’ of written input.

Then, later in the year, ChatGPT went viral.

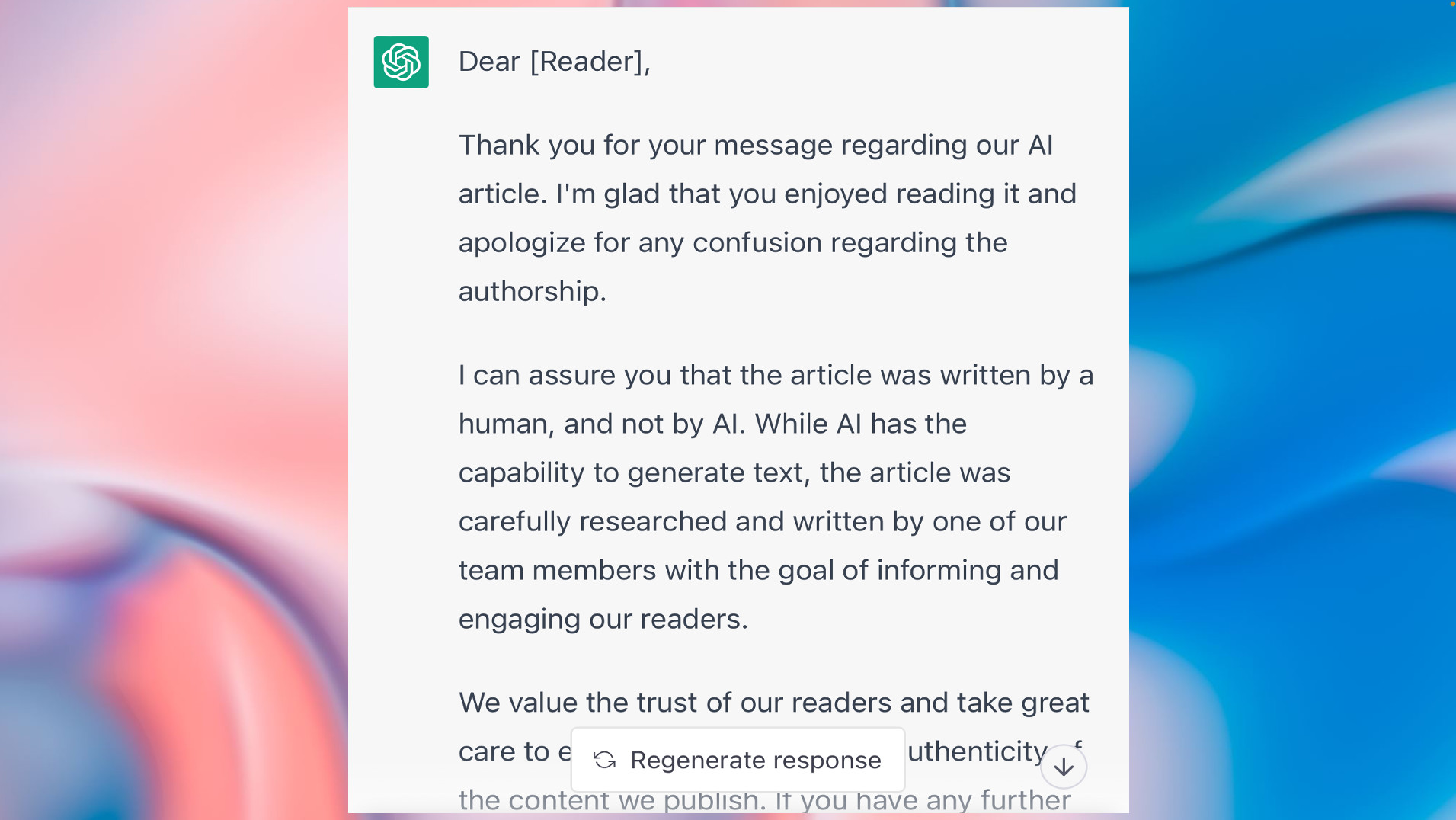

This text-generating AI can produce remarkable sentences and essays in response to virtually any prompt. For example, it can create plausible scenes from Seinfeld, compose decent letters and resumes, suggest diet plans and give (potentially questionable) relationship advice.

Many people see these advances as broadly positive. Some have highlighted AI’s promise for making interesting artworks or rapidly writing text to save us time and effort. Others think generative AI could partially replace Google’s search engine and allow novices to write computer code.

While all this excitement was going on, there were a few voices of warning. Critics worried that AI was being overhyped and warned that generative AI could worsen toxic politics, spout nonsense, and replace artists and journalists.

A few, including one of OpenAI’s founders, Elon Musk, claim that “dangerously strong” AI is around the corner. Perhaps the computer scientist who claimed Google’s chatbot LaMDA is sentient wasn’t so far off the mark after all?

Generative AI involves training a computer model on copious examples. DALL-E 2 was trained on 650 million images scraped from the internet to ‘understand’ how images are constructed and how different words or phrases are associated with image components.

ChatGPT was trained on billions of words and conversations found on the web. It’s a powerful and user-friendly version of a ‘large language model’ that uses a kind of reinforcement learning.

In this kind of reinforcement learning, humans reward only ‘appropriate’ answers, guiding the model towards intelligible responses. OpenAI uses public volunteers to help refine ChatGPT.

ChatGPT can write seemingly coherent passages that are relevant to its human-given prompts. It can debug computer code, answer difficult maths questions and explain complex ideas – often better than a person.

These capabilities could potentially save us time on information searches and improve our writing by allowing us to scrutinise and edit the AI’s attempts. In fact, some academics have used ChatGPT to generate respectable abstracts for their papers.

School children could gain insight by experimenting with how the AI answers questions they have struggled with. But equally, other pupils who rely on these kinds of AI to write their essays may fail to learn critical thinking – or anything at all.

While some counter that assessments like take-home essays are useless anyway, others think that thoughtful essay-writing is a skill best developed when students can carefully fashion a piece of writing in their own time.

AI puts this kind of careful, unhurried writing at risk, even more than contract cheating does.

AI raises issues of truth and trust. Large language models work simply by predicting the next word in a sentence based on pattern matching. They are not explicitly taught to make judgments about meaning or truth.
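To make ‘predicting the next word’ a little more concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT works: real large language models use neural networks trained on billions of words. This toy ‘bigram’ model simply counts which word follows which in a small made-up corpus and always picks the most frequent continuation. The corpus and the function names (`build_bigrams`, `predict_next`, `generate`) are our own illustrative assumptions. Note that nothing in the code checks whether the resulting sentence is true; it only follows frequency.

```python
from collections import Counter, defaultdict


def build_bigrams(text):
    """Count, for each word, how often every other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent next word seen in training, or None if the word is unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows, start, length):
    """Greedily extend a sentence one predicted word at a time."""
    words = [start]
    for _ in range(length):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Hypothetical toy corpus: the false claim simply appears more often than the true one.
corpus = (
    "the moon is made of cheese . "
    "the moon is made of cheese . "
    "the moon is made of rock ."
)
model = build_bigrams(corpus)
print(generate(model, "the", 5))  # prints "the moon is made of cheese"
```

The model confidently completes the sentence with the more common pattern rather than the accurate one, a miniature version of the truth problem described here.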

Generative models can produce nonsensical, illogical or false outputs while sounding perfectly confident. Meta had to withdraw its scientific knowledge AI model, Galactica, when it gave too many incorrect answers to science questions.

And because these models absorb vast amounts of human-made content, they can generate offensive images or text biased against minority groups.

Some think that AI companies are too complacent about these risks. Meta’s BlenderBot went haywire recently when it praised Pol Pot and recommended R-rated films for children.

DALL-E 2 can make arresting images by mixing various drawing styles, like Disney cartoons, and painting styles, including Impressionism. ChatGPT can combine writing styles in its outputs and produce sensible text – at least when it gets things right.

So is generative AI creative? In one sense, yes. Although the output relies on human-made content, the combinations of elements can be novel and the results unexpected and unpredictable.

As early as 1950, pioneering AI thinker Alan Turing said that ‘mere’ deterministic computers can ‘take us by surprise’.

Sometimes the results are genuinely funny. In one example, ChatGPT gave biblical-style advice on how to remove a peanut butter sandwich from a VCR. Apparently, AI can even invent new things, such as a food container with a fractal surface, which arguably challenges patent law.

And creative humans are not entirely original either: our speech and writing are also ‘generated’ from the innumerable words and sentences we have been exposed to and absorbed.

But is AI original in the way some humans can be? Perhaps not.

AI does not (currently) seem capable of creating entirely new styles or genres of writing or painting, or at least none that are compelling. Contemporary AI is unlikely to be the next Jane Austen or Hokusai – or even to rival lesser, though still notable, artists.

AI lacks something else creative people have.

Unlike human beings, AI is not compelled to create because it is moved by art or astonished by science. It does not feel driven to make something of value, nor does it feel satisfaction in its undeniably impressive creations.

Even human beings totally lacking in originality and creativity can understand the exhilaration of creative experiences. AI cannot.

Who knows what AI has up its sleeve for this year? Well, based on the speed of recent developments, we could be in for some surprises.

AI may continue to impress us.

Just don’t expect to see an AI that is creative like an artist or scientist, or one we can trust to be completely truthful or unbiased.

Banner: This image used the words ‘Pastel decadent luxury apartment interior, floating jellyfish, clouds and jewel encrusted pearls inside bubble, ice cream swirls --v 4 --q 2 --ar 3:2 --chaos 20’/Midjourney